In the last section we focussed on what networks are, why they are used and covered a broad range of related topics such as how networks are categorised, topologies, performance factors, internet technologies and more. The second half of this part of the GCSE specification concentrates almost solely on how data is transmitted through a network, regardless of the connection type used.

As you may well be aware, there are multiple ways in which devices may be connected together physically, for example wirelessly. Furthermore, there are an almost limitless number of different tasks that a device may be carrying out on a network, for example acting as a server or streaming video.

Each task is different and places different demands and priorities on the network. For this reason, a range of methods and rules are used to ensure that traffic, regardless of type or purpose, is able to be reliably and securely sent from source to destination.

Wireless Transmission – Wifi

Wireless transmission of network data really did change the entire way we use computing devices. Without wireless we wouldn’t have any of the portability we take for granted today and you certainly wouldn’t be able to update the world on important, life changing events on social media no matter where you were, such as next time you notice it’s snowing and decide to tell everyone else in case they hadn’t noticed, even though they too have eyes and can experience crazy phenomena such as the weather by just looking out of the window, into the dark, dangerous real world outside…

Wireless network connections are often referred to as WiFi. You will often see it claimed that this stands for “Wireless Fidelity”, but it is really just a brand name chosen to sound like “Hi-Fi.” WiFi is a set of standards for transmitting data using radio waves to computer devices in place of a physical cable. It is designed to work using the same networking methods or protocols as a wired connection.

To summarise, WiFi is:

- A set of standards, IEEE 802.11 to be precise

- These standards dictate how computers should be connected without wires

- They include methods of encrypting and securing the data which is sent

- They also state which frequencies should be used for transmission of data (the 2.4GHz and 5GHz bands)

Wireless communication is now so ubiquitous that it can be found in everything from computers, consoles and tablets all the way down to children’s toys. Whilst WiFi is undoubtedly very convenient and carries with it a wealth of advantages over a wired connection, it is not perfect and there are some significant disadvantages.

WiFi Advantages:

- No need to connect using cables

- Devices can be located anywhere where there is sufficient signal strength – flexibility

- Easy to connect new devices

- Cheaper to install a wireless access point than to run cables to every device that needs to connect

WiFi Disadvantages:

- Signals are badly affected by distance

- and solid objects

- and interference from other devices/electronics

- Security – anyone can intercept wireless data/traffic, although it should be encrypted if you are using a secure connection

These disadvantages are too significant to simply state and move on from. Range and interference can be dealt with in a number of ways, but to understand how requires an in-depth conversation about radio waves and how they work. Security is a massive issue as anyone within range can intercept wireless network traffic and there is no way to tell that it has happened.

Wireless Security

WiFi is inherently less secure than Ethernet. Why? Because you can easily intercept packets of data by just sticking an antenna in the air, whereas with Ethernet you’d need to physically plug yourself in to the network.

Consequently, it was necessary for WiFi to be developed alongside some form of encryption that could be applied to connections. Multiple methods of securing connections between wireless devices have been devised over time, such as the initial WEP and now WPA, which stands for Wi-Fi Protected Access. The current standard for encrypting and securing wireless data is called WPA3, although the world is still full of WPA2 devices.

WPA2 Provides:

- An implementation of the 802.11i standard (you can’t get enough 802.11 – look it up it’s truly fascinating reading.)

- Per packet encryption

- 256 bit encryption key

In case you were wondering how secure 256 bit encryption is, if you were to try and guess the key by brute force (trying different combinations until you happened to be successful) then you would potentially have to try 2^256 different combinations. Written out in full, that is 115,792,089,237,316,195,423,570,985,008,687,907,853,269,984,665,640,564,039,457,584,007,913,129,639,936. I don’t know about you, but I don’t even know how to pronounce a number so large.

By the time you and the fastest computer on earth had tried all of those combinations you would almost certainly be dead, the universe would probably have ended too and you would go down in history as one of the least interesting people ever to have lived.
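If you want to check that claim for yourself, the arithmetic is easy to sketch in Python. The guessing speed below is a made-up assumption (a billion billion guesses every second), not a figure for any real computer.

```python
# Number of possible 256 bit keys: every extra bit doubles the count.
KEY_BITS = 256
keyspace = 2 ** KEY_BITS

# ASSUMPTION for illustration only: a machine that can test 10**18 keys
# every second. This is not a measurement of any real computer.
GUESSES_PER_SECOND = 10 ** 18
SECONDS_PER_YEAR = 60 * 60 * 24 * 365

# Worst case: every single key has to be tried.
years_to_try_all = keyspace // (GUESSES_PER_SECOND * SECONDS_PER_YEAR)

print(f"Possible keys: {keyspace:,}")
print(f"Digits in that number: {len(str(keyspace))}")
print(f"Years needed to try them all: about {years_to_try_all:.2e}")
```

For comparison, the universe is thought to be roughly 13.8 billion years old, which is a far smaller number than the one this prints.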

Encryption

Encryption is covered in more detail in 1.4.2 – Identifying and Preventing Vulnerabilities. It doesn’t make sense to repeat the information twice, so for now, here’s a very brief summary:

Encryption is the process of scrambling data so that if it is stolen or intercepted then it will mean nothing without the key to decrypt it. This should keep data safe even if someone manages to gain access to physical hardware or your network.

What is it?

- Encryption scrambles data using a complex algorithm.

- This encryption algorithm uses a “key” to encrypt the data

- The scrambled data cannot be read without the correct key to “unlock” (decrypt) it.

- In the public key method described below, the key used to decrypt the data is different from the key used to encrypt it

How does it work?

- The recipient of a file makes available a “public key“

- The sender encrypts the data to be sent using this public key. This key can be used by anyone to encrypt data – it’s public!

- However, this key cannot be used to decrypt the data! It is a one way process

- The scrambled data is sent to the recipient

- The recipient uses their “private key” to decrypt the data so they can view it

- The recipient keeps their private key secret – if it were to be revealed then anyone could unlock/decrypt data sent to them.
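The public/private key idea can be sketched with some deliberately tiny numbers. This is a toy version of one well known public key method (RSA) and is only an illustration: the primes, the exponent and the function names are all chosen for this example, and real keys are hundreds of digits long.

```python
# Toy public key encryption with tiny numbers. NOT secure, illustration only.
p, q = 61, 53              # two secret primes chosen by the recipient
n = p * q                  # 3233, forms part of both keys
phi = (p - 1) * (q - 1)    # 3120, only used to work out the private key

e = 17                     # public exponent (example value)
d = pow(e, -1, phi)        # private exponent, so that (e * d) % phi == 1

public_key = (e, n)        # the recipient gives this to everyone
private_key = (d, n)       # the recipient keeps this secret


def apply_key(number: int, key: tuple[int, int]) -> int:
    """Encrypting and decrypting use the same maths, just different keys."""
    exponent, modulus = key
    return pow(number, exponent, modulus)


message = 65                                   # data must be a number below n
ciphertext = apply_key(message, public_key)    # sender encrypts
recovered = apply_key(ciphertext, private_key) # recipient decrypts

print(f"Sent: {ciphertext}, recovered: {recovered}")
```

Notice that the sender never needs the private key, and running the ciphertext back through the public key does not give the original message.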

How does it prevent attacks or help us recover from an attack?

- Encryption makes sending data over a network more secure as even if a connection is compromised then the data should remain unreadable.

- Encryption buys us time to make changes, change passwords or take other steps to secure data before the encryption is broken

Wifi Standards

Wireless standards are all in the 802.11 standard set and each one defines how connections are to be established, how the sending and receiving of data should work and also how fast that transmission can happen.

- 802.11b – 11Mbps (very slow)

- 802.11g – 54Mbps

- 802.11n – 600Mbps

- 802.11ac – 1300Mbps

- 802.11ax (or WiFi 6 as it’s known) – up to 9608Mbps depending on hardware used (stupidly fast)
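To get a feel for what those speeds mean, here is a rough sketch that works out the best possible time to send a file at each theoretical maximum. The 1,000 megabyte file size is just an assumed example, and real connections never reach these headline figures. Remember that speeds are quoted in megabits, whereas file sizes are usually in megabytes, and there are 8 bits in a byte.

```python
# Theoretical maximum speeds from the list above, in megabits per second.
MAX_SPEED_MBPS = {
    "802.11b": 11,
    "802.11g": 54,
    "802.11n": 600,
    "802.11ac": 1300,
    "802.11ax": 9608,
}


def transfer_seconds(file_size_megabytes: float, speed_mbps: float) -> float:
    """Best case time to send a file, ignoring all overheads."""
    file_size_megabits = file_size_megabytes * 8  # 1 byte = 8 bits
    return file_size_megabits / speed_mbps


FILE_SIZE_MB = 1000  # ASSUMPTION: an example 1,000 megabyte video file

for standard, speed in MAX_SPEED_MBPS.items():
    seconds = transfer_seconds(FILE_SIZE_MB, speed)
    print(f"{standard}: {seconds:.1f} seconds")
```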

Each of these wireless standards is affected by transmission frequencies that have been allocated to their use. It may sound strange, but the transmission of data, TV and Radio signals are very tightly regulated. You are not allowed to simply pick a frequency of your choice and start sending out transmissions.

Regulators have assigned two main frequency bands for wireless network connections. These are 2.4GHz (gigahertz) and 5GHz:

- 2.4Ghz is generally a slower, more stable signal and can be sent longer distances

- 5Ghz is generally faster but is more affected by objects, distance etc

Each band is split into a number of channels to try and separate out traffic on the network, especially in areas where there may be multiple wireless networks (think about how many networks you can pick up around your house from your neighbours). However, those channels do overlap in 2.4Ghz transmissions and furthermore, everyone with a wireless network is attempting to use the same available frequencies. Therefore, one big problem in wireless networking can be poor performance in congested areas where there is simply too much activity on each channel/band.

Before we go any further, we need to understand a little bit more about radio waves so we can both understand what these 2.4 and 5Ghz frequencies actually are and so we can talk about some issues we face when using wireless networks and transmission.

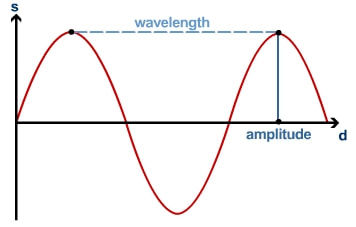

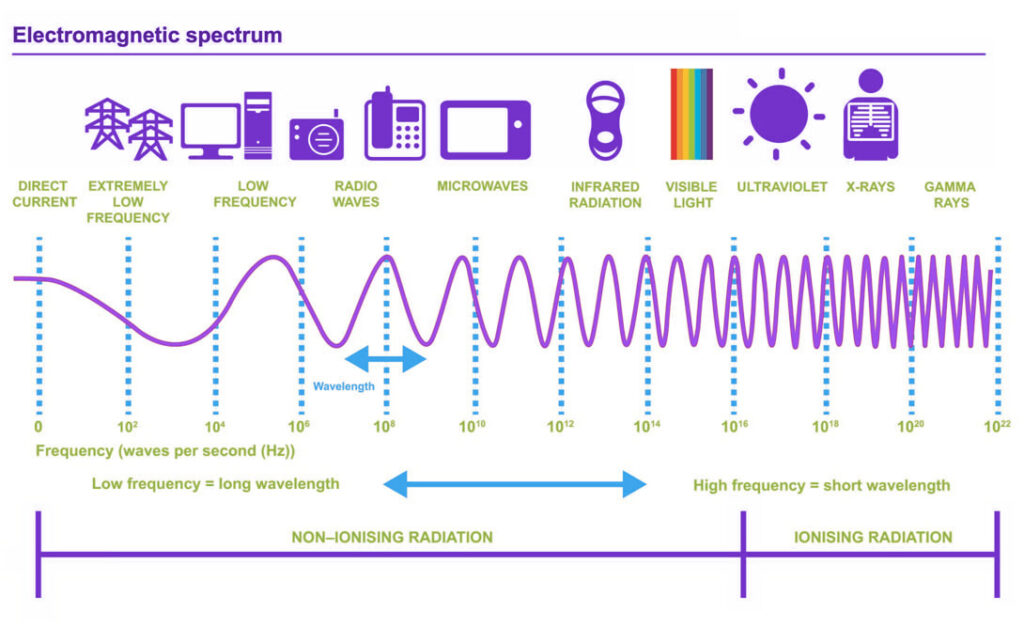

In Science lessons you should have come across waves and the terminology used to describe them. In the diagram above, you can see that the wave flows up and down across the X axis. This up and down wave motion is called an “oscillation.”

The number of times a wave goes up and down (oscillates) during a second is called the frequency of the wave – literally “how many times it oscillates.” To complete the picture, the peaks of these wave oscillations (the very top or the very bottom) can be far apart or close together. The distance between two peaks is called the wavelength.

Finally, the peak of a wave may be very high and steep, or very low and shallow. The height of the wave from the X axis is called the amplitude of the wave.

The combination of these three features – frequency, wavelength and amplitude – gives the wave its properties. In other words, changing these three things will change the behaviour of a wave and what it does.

Without bending your mind with science too much, lots of things in the universe are a form of electromagnetic waves:

- X-Rays

- Microwaves

- Visible Light

- Radio Waves

The only thing which differentiates this electromagnetic energy is…. the wavelength or frequency of the waves.
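Frequency and wavelength are tied together because all electromagnetic waves travel at the speed of light. The wavelength is simply the speed of light divided by the frequency. A small sketch (the function name is made up for this example):

```python
SPEED_OF_LIGHT = 299_792_458  # metres per second


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in metres = speed of light / frequency in hertz."""
    return SPEED_OF_LIGHT / frequency_hz


# 1 gigahertz is 1,000,000,000 oscillations per second.
for label, frequency in [("2.4GHz", 2.4e9), ("5GHz", 5e9)]:
    centimetres = wavelength_m(frequency) * 100
    print(f"{label}: wavelength of about {centimetres:.1f} cm")
```

The higher the frequency, the shorter the wavelength, which is why you only ever need to quote one of the two.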

Frequencies

As we now know, WiFi is allocated the 2.4Ghz and 5Ghz frequency bands. In the diagram above you can see this sits somewhere between radio waves and microwaves.

To summarise:

- Electromagnetic waves cover a broad spectrum of frequencies. The frequency of a wave/transmission refers to how quickly the wave oscillates

- This oscillation for WiFi is measured in Gigahertz (Ghz) – 2.4Ghz or 5Ghz

- To receive data your wireless card literally “tunes in” to a frequency and listens to it in almost exactly the same way you tune your radio to a station to receive it.

- And just like there are many radio stations on many different frequencies, there are many different frequencies within each band that WiFi can be transmitted on.

As with everything in computing, wireless transmission is a trade off between performance (speed) and other factors such as the distance you can transmit data over.

Unlike a cabled connection, waves are affected by their surroundings. Whilst a 2.4 or 5Ghz wave can absolutely pass through apparently solid objects such as walls, they are significantly weakened when they do so. Other factors such as conductive materials (the metal frames buildings are made from, for example) can also significantly affect the strength and quality of a wave.

Whilst neither band is perfect, 5Ghz is the future of WiFi as its strengths far outweigh any weaknesses. These advantages and disadvantages are summarised below and are necessary for you to understand in your exam.

Advantages of 2.4Ghz

- Can travel long distances

- Less affected (not immune!) by physical objects

Disadvantages of 2.4Ghz

- Transmission speeds are slower than 5Ghz

- Has only three non-overlapping channels (see below)

- More affected by congestion where there are multiple wireless networks in a small area

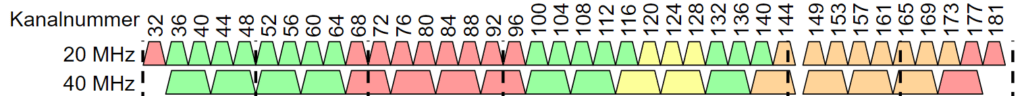

Advantages of 5Ghz

- Very high transmission speeds

- Many more channels available to avoid congestion

- Channels can be combined to create higher bandwidth

Disadvantages of 5Ghz

- Much more affected by physical objects

- Does not cover as great a distance as 2.4Ghz

WiFi is fairly clever in that you can have multiple networks all transmitting and receiving on the same frequency. If you did this with radio, it’d sound really weird because you’d be listening to two completely different stations at exactly the same time!

Your wireless card or access point is clever enough to be able to filter out some of the “noise” of other networks it is not currently connected to. However, no amount of clever noise cancelling and error correction is going to handle this noise beyond a certain point. This explains why, if there are a lot of wireless networks in a small space, it can have a drastic effect on performance and make things go very slowly – this also goes some way to explain why you can never, ever get 4G on your phone working in a football stadium during a game…

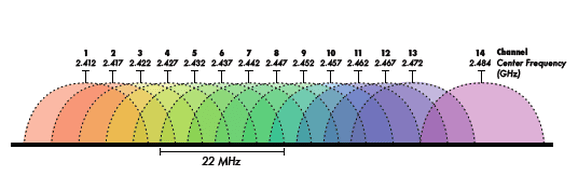

To mitigate this interference, in each band whether that is 2.4 or 5Ghz there are multiple WiFi channels that can be used to separate networks out – very much like radio stations are all on slightly different frequencies so they do not interfere with each other.

Channels:

- The frequencies that WiFi can use are split down into smaller ranges – each one becomes a “channel”

- If several networks are using the same channel then data will be subject to interference and this causes slow downs

- However, most wireless Routers or Access Points are now intelligent enough to switch channels if interference is bad…

- …this dynamically and actively reduces interference and should improve performance.

- The downside is that on 2.4Ghz networks they overlap so there are actually only ever 3 channels in any given area that are completely separate. See below:

As you can see in the diagram above, whilst there are 14 channels, each one overlaps with its neighbours. Only three channels (usually 1, 6 and 11) can be used at the same time without overlapping each other in the 2.4GHz wireless range. 5GHz solves this problem by having far, far more channels available which can keep traffic separated.

Ignoring the fact the diagram above is in Danish (Kanalnummer = Channel number), you can see there are vastly more channels available for 5GHz connections than 2.4GHz.
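The “only three channels” rule for 2.4GHz can be checked with a little arithmetic. The sketch below makes two assumptions: it only looks at channels 1 to 13 (channel 14 is a special case that is only permitted in Japan), and it treats each channel as 22MHz wide, which is the figure for the older 802.11b standard. The function names are made up for this example.

```python
# ASSUMPTION: each channel is treated as 22 MHz wide (the 802.11b figure).
CHANNEL_WIDTH_MHZ = 22


def centre_mhz(channel: int) -> int:
    """Centre frequency of a 2.4GHz channel (channels 1 to 13 only)."""
    if not 1 <= channel <= 13:
        raise ValueError("this sketch only covers channels 1 to 13")
    return 2407 + 5 * channel  # channel 1 is centred on 2412 MHz


def overlaps(a: int, b: int) -> bool:
    """Two channels overlap if their centres are closer than one width."""
    return abs(centre_mhz(a) - centre_mhz(b)) < CHANNEL_WIDTH_MHZ


# Starting at channel 1, keep picking the next channel that is clear
# of every channel already chosen.
clear_channels = []
for channel in range(1, 14):
    if all(not overlaps(channel, chosen) for chosen in clear_channels):
        clear_channels.append(channel)

print(clear_channels)
```

The centres are only 5MHz apart but each channel is much wider than that, which is exactly why neighbouring channels bleed into each other.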

Bluetooth

Bluetooth is a standard for creating short range wireless connections between devices. It is largely used for peripherals such as remote controls, games console controllers, wireless headphones and so forth.

Bluetooth shares many similarities with WiFi in that data is sent using packets, it uses the 2.4GHz frequency band to transmit data and the frequency range it uses is split up into channels so that multiple devices can operate in the same area.

The main difference is in the transmission power used. Bluetooth is an extremely low power standard, meaning that signals are typically transmitted or received over no more than 10 metres or so. This is more than enough for wireless speakers, headphones or remote controls which must only operate over the distance of the average room.

Bluetooth is ideal for mobile, battery powered devices due to its low power consumption and small transmission distances meaning it does not interfere with other devices in the vicinity. Bluetooth networks tend to be “ad-hoc” meaning they are set up between clients as and when they are needed and are discarded when they are not needed any longer.

IP and MAC addresses

In 1.3.1 – Networks and Topologies, we talked about how data is sent through a network by splitting it up into packets that are then routed (by routers, no less) through the internet. In order to identify routers and servers connected to the internet, each one is allocated an IP address. It seems confusing to then learn that devices connected to a network actually have two addresses that identify them on a network! Why?!

IP addresses are used in routing – the act of finding a path through a network to a destination device. The alternative, MAC addresses, are used by switches to find a specific device on an internal network – you can think of it as the final stage in the journey, ensuring data is delivered to the right place.

There are some key differences between the two types of address and we look at each of them in turn below.

IP Addresses

To summarise our existing understanding, you should know that:

- Devices are connected to networks using either wires or wireless access points (radio waves)

- Data that is sent is split in to packets

- These packets are delivered to devices on internal LANs by switches…

- …or moved closer to their destination in a WAN by routers

Every packet sent through a network will have a destination and source IP address written in to the header data of each packet. This is so any router knows where the packet should be delivered to and also where it came from.

Each device connected to a network is given an IP (Internet Protocol) address. An IP address looks something like this: 192.168.0.1

IP addresses have a very specific structure:

- There are always 4 numbers, separated by dots

- Each number can be anything from 0 to 255

- Each of these numbers is stored as… 8 binary bits! Remember the biggest number we can store in binary with 8 bits is 255.

- So, therefore, an IP address is 32 bits long in total

- IP addresses can be anything in the range 0.0.0.0 to 255.255.255.255
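To see the 32 bits for yourself, here is a short sketch that turns a dotted IP V4 address into binary. The function name is made up for this example, and the address used is just the common home router address.

```python
def ipv4_to_bits(address: str) -> str:
    """Show each of the four numbers in an IP V4 address as 8 binary bits."""
    parts = address.split(".")
    if len(parts) != 4:
        raise ValueError("an IP V4 address has exactly 4 numbers")

    octets = [int(part) for part in parts]
    if any(not 0 <= octet <= 255 for octet in octets):
        raise ValueError("each number must be between 0 and 255")

    # Format each number as binary, padded with zeros to 8 digits.
    return ".".join(f"{octet:08b}" for octet in octets)


bits = ipv4_to_bits("192.168.0.1")
print(bits)
print("Total bits:", len(bits.replace(".", "")))
```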

The internet is a fluid, dynamic network. Devices are constantly being connected or disconnected from the network, which is one small part of the reason why no one knows what it really looks like and we call it “the cloud.” As a consequence, IP addresses are usually allocated on a temporary basis: they are “leased” to devices that ask for them. This means that IP addresses allocated to a device connected to a network can, and do, change.

Furthermore, as IP addresses are limited to the range 0.0.0.0 to 255.255.255.255 (and some of these are actually reserved for special purposes) there are not enough IP addresses to simply assign one to each device permanently. There are many clever tricks that have been implemented in networking to get around this lack of IP addresses.

- IP addresses are dynamically assigned. This means they are automatically given out, usually by your router, when you connect to a network

- Because they are dynamic they can change.

- This means you can’t use an IP address to identify an actual piece of hardware – you only know that an IP address is a destination on a network.

- There are approximately 4.3 billion possible IP addresses (2^32). This means there are not enough for all the devices that are/could be connected to the internet!

The IP addresses we have been looking at are specified in version 4 of the Internet Protocol, so they are “IP V4” addresses. The problem of running out of IP addresses had been known about long before it actually happened and work began on a new version of IP which would solve this issue – IP V6. Do not ask me what happened to version 5, perhaps it was late to dinner.

IP V6 addresses are longer and consequently we are never, ever going to run out of them, as there are enough to allocate an IP V6 address to every grain of sand on Earth many, many times over. IP V6 addresses are 128 bits in length versus the 32 bits used for IP V4.

IP V4 offers roughly 4.3 billion unique addresses, whereas IP V6 has… wait for it… 340,282,366,920,938,463,463,374,607,431,768,211,456 (that is 2^128).
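Both totals come straight from the number of bits in the address, because every extra bit doubles the number of possible addresses. A quick sketch to check the sums:

```python
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_addresses = 2 ** IPV4_BITS
ipv6_addresses = 2 ** IPV6_BITS

print(f"IP V4: {ipv4_addresses:,} addresses")
print(f"IP V6: {ipv6_addresses:,} addresses")

# How many IP V6 addresses exist for every single IP V4 address?
print(f"Ratio: {ipv6_addresses // ipv4_addresses:,}")
```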

The only issue with IP V6 is that there is a lot of networking hardware out there in the world (understatement of the year award). Network equipment tends to last a long, long time and therefore the transition to new hardware that supports and actually uses IP V6 has been extraordinarily slow and painful, but it is the future.

To summarise:

- When you connect to a network, your device is given an IP address (usually by the router)

- This IP address is not a unique identifier for your device and can change – they are dynamic

- The IP address is used to route packets to a destination device connected to a network

- Packets contain a source and destination IP address in their headers

- IP addresses are 32 bit, written in decimal, separated by dots – e.g. 192.168.0.1 (which will probably take you to your router log in page if you type it in to a browser…)

- We can and will run out of IP V4 addresses

- The solution is IP V6.

MAC Addresses

The eagle eyed amongst you, when reading about IP addresses, should’ve asked the question “if IP addresses don’t uniquely identify a device, what does?”

More to the point, you should also have asked “…and how do we know one device from another?”

Or “If I have the latest iPhone and my friend has the exact same one, how does a network know which is which?!”

And you would have a point. The answer, of course, is MAC addresses.

To define, the term MAC Address means Media Access Control Address.

We’re still none the wiser are we?

A MAC address is simply a unique identifier given to any device which is capable of connecting to a network. Therefore, anything that has wireless or wired capability has a MAC address.

A MAC address looks something like this (a made-up example): 00:1A:2B:3C:4D:5E

This looks significantly different to an IP address and that’s because… it is. But not as much as you might think.

Due to convention, instead of writing down MAC addresses in decimal/denary as we do with IP addresses, each 8 bit section is written as a two digit hexadecimal number. This is probably to do with the length of a MAC address compared to an IP address: we are less likely to make mistakes when reading out a MAC address written in hexadecimal.

MAC addresses consist of:

- 6 “octets” separated by colons “:”

- Each octet may be any number from 0-255 (like an IP address)

- Each octet is written in Hexadecimal (which explains all the letters). If you’re not sure what that is, look at number systems here.

Some facts about MAC addresses that you might find really useful in the exam:

- They are designed to be unique and do not normally change. If you throw away a device, the MAC address dies with it.

- It is used to uniquely identify each device on a network

- There are 2^48 possible MAC addresses (281,474,976,710,656 possible MAC addresses) so we are not going to run out of them in the near future.

- A MAC address is given to a device when it is manufactured – unlike IP addresses which can be assigned every time you join a network.
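Because each octet is just an 8 bit number written in hexadecimal, it is easy to convert a MAC address back into ordinary decimal numbers. The sketch below uses a made-up MAC address and a made-up function name purely as an example.

```python
def mac_to_octets(mac: str) -> list[int]:
    """Convert a MAC address such as 00:1A:2B:3C:4D:5E into 6 numbers."""
    parts = mac.split(":")
    if len(parts) != 6 or any(len(part) != 2 for part in parts):
        raise ValueError("expected 6 two digit hexadecimal octets")

    # int(text, 16) reads the text as a base 16 (hexadecimal) number.
    return [int(part, 16) for part in parts]


example_mac = "00:1A:2B:3C:4D:5E"  # hypothetical address for illustration
print(mac_to_octets(example_mac))

# 6 octets of 8 bits each gives 48 bits in total.
total_mac_addresses = 2 ** 48
print(f"Possible MAC addresses: {total_mac_addresses:,}")
```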

When a device is connected to a network, it is assigned an IP address so that data can be routed to it through the internet. However, a Switch will then translate this IP address into a MAC address to ensure that packets are delivered to their final destination.

This explains why your home internet can be shared so easily:

- You have only one internet connection at home

- Which means you are only given one IP address for your house

- Your switch uses MAC addresses to ensure that data is directed to the right device when it arrives

Standards

We could define what standards are by simply saying “Standards are a set, fixed method or way of doing something.”

That, whilst completely true, doesn’t help us to understand why they exist nor why they are so important in our every day lives.

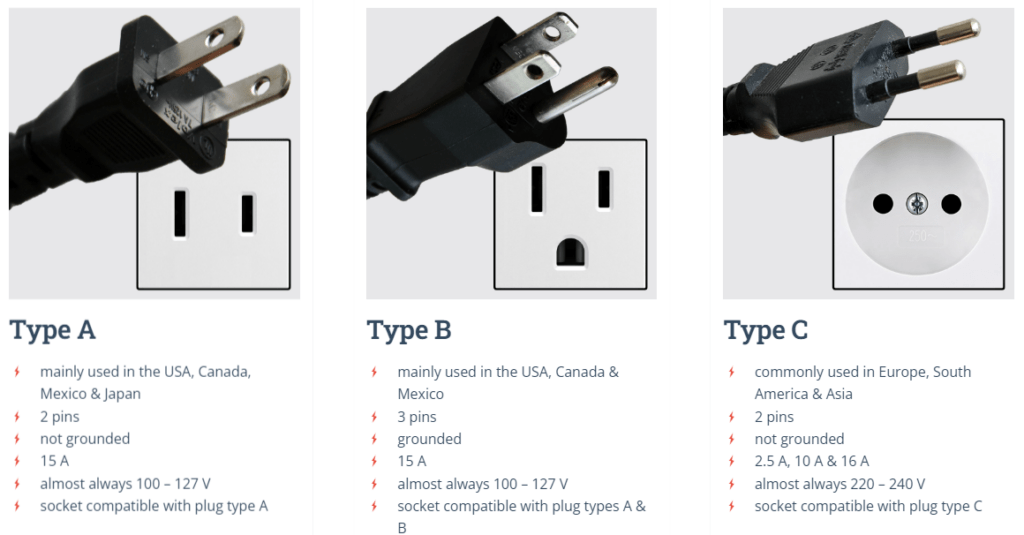

You will be well aware that there is always more than one way of doing something. If you’ve ever been abroad, then you’ll know that even something as commonplace as sending electricity to an appliance is totally different in other countries than it is in the UK.

Having different methods or standards for doing the same thing is fine in some respects – each country sticks to one type of plug within their borders. However, it starts to fall apart and cause problems on a global scale.

The ultimate goal of having standards or standardising the way we do things is to end up with one, single method of doing something. This has the distinct advantage that, no matter where you are in the world, which language you speak, which manufacturer made your product – it will just work.

Doing things in a standard way has many distinct advantages:

- It reduces costs – you do not need to develop your own solution to a problem

- It creates compatibility – your products work with those from other manufacturers

- It is convenient – people know how things work and what to expect

- It usually results in cheaper products for consumers leading to wider adoption

Some companies don’t like standards. It costs many millions to create a new product and, understandably, a business will want to maximise its investment. If you use standards then anyone can create a competing product with the same features. If you use proprietary technology (meaning you do not use a freely available standard or you refuse to share your methods) then you can exclusively sell a product.

Sometimes this approach works, but more often it does not. Computing and technology history is littered with examples of great proprietary products that are now no longer relevant.

One famous example is Sony, who created the first decent video recorder. They called their technology Betamax and these were fantastic products. Betamax videos were small, compact and created very high quality recordings of your favourite TV show. However, they were very expensive, around £500 in the early 1980s, which is roughly somewhere around £3 trillion in today’s money.

Sony refused to share or sell their Betamax technology to anyone else and so competitors came up with their own version called VHS, short for Video Home System. VHS tapes were larger than Betamax and produced inferior quality recordings, however, because VHS was an open standard meaning anyone could make a VHS recorder or VHS tapes, they quickly became the most popular choice and Betamax was dead in the water despite its superiority.

What has this got to do with computers and networking? Everything.

At the same time as Betamax, in the 1980s, home computers were all the rage. They were available from a variety of once famous brands such as Commodore, Amstrad, Sinclair, Acorn and so on. Each one worked in a completely different way despite all using very similar CPU, memory and other technology. These computers could not easily talk to each other and were completely incompatible.

In 1980, IBM decided to enter the market for “micro” computers – machines that could fit on your desk rather than take up an entire room to themselves. IBM made the decision that in order to cut costs, they would use only off the shelf, widely available components in their new IBM PC as it was to be called. This meant they were making a machine that used standard, well understood components.

The magic that tied this system together was something called the “BIOS.” This is a clever piece of software which kicks in when the power is turned on and basically makes the computer work to the point where it can load software and be used. IBM kept this secret to themselves and were soon selling hundreds of thousands of computers.

Other companies quickly worked out how the IBM BIOS worked and made their own versions and copies. All of a sudden, the computer world was being standardised almost completely by accident. IBM “compatible” computers flooded the market and soon any business machine was either an IBM PC or a PC compatible – the Personal Computer had become the de facto standard in computing.

The advent of the IBM PC and its clones marks the point in history where standards became more important than who manufactured a device. By standardising computing, the world had taken a massive leap for the following reasons:

- Software could be written for one single platform, meaning it could be written once and run on many different machines, made by many different manufacturers

- Computers could communicate as they all used the same or similar components – this made networking simple

- IBM PCs were modular, meaning you could plug in expansion cards which added new features, making it possible to customise a machine for a particular purpose.

Ultimately, we arrive at the present day. A time where, because of standards being adopted across the world, we are able to cheaply build devices of all types, sizes and purposes which can communicate over the internet. Without standards, there would be no internet, no global communication or information sharing.

All of this, just because we agreed to a set of rules for how computers should talk to each other.

Protocols – Implementing Standards

Computing today is dominated by standards. In this section we are now going to focus solely on standards associated with communicating on a network. These standards for network communications are called “protocols.”

Definition: A protocol is a set of rules which establish how communication between two devices should happen.

Protocols define absolutely every last tiny detail of what can, could and might happen during a communication. Everything from how to initiate a connection, how to confirm you have received data, what to do if there is a mistake or error. Protocols leave nothing to the imagination, nothing to chance and there is no allowance for interpretation. In other words, the rules are the rules and that’s it!

Because protocol descriptions and rules can very quickly become large and complex in nature, something broad like “connecting to the internet” or “requesting and displaying a web page” will be broken down into manageable chunks called “layers” – we are going to discuss these in more detail in the next section. Layers are usually descriptions of tasks that have to be carried out, such as “link” a connection or to create a “session” between a client and server.

However, even layers are too vague and broad and so each layer will be broken down even further into very clear, distinct tasks that must be carried out and these are described by individual protocols.

An individual protocol might define the rules for:

- How devices establish a communication link

- How data should be sent and received (including how it is broken up and re-assembled)

- How data is routed around a network

- How devices identify themselves and connect to a network

- How errors and data corruption should be handled

A protocol may be implemented (carried out) by either hardware, for example a network card, or in software, for example a web browser or operating system.

Reducing Complexity – Layers and the TCP/IP Model

Networking, as we so sweepingly call it, is actually extremely complex to manage at a hardware and software level. The internet as we know it today is the result of multiple technologies having developed and evolved over time and eventually merged together as the accepted standard way of communicating.

Sending data across a network is not a case of “here you go, send that down the wire.” If we break down the problem of connecting computers together in order to send and receive data, we may possibly describe the main issues facing us as follows:

- Software – which programs want to use networking and communication features?

- Network Management – how do we establish connections, split up and send data, manage connections between devices?

- Physical Networking – how do we actually connect devices at a physical level? How do we transmit electrical signals that represent data? How do we speed up transmission and avoid data loss?

There are, clearly, a lot of questions to be answered!

As such, those involved in networking came up with conceptual models, the “TCP/IP model” and the “OSI model”, to try and describe what happens at each significant stage of creating a network connection, sending data and receiving data.

In the exam, we are expected to have a broad overview of the TCP/IP model. To put it in simple terms, TCP/IP is the model which describes how internet connections are established and used. The “TCP/IP stack” as it is called, describes how data is presented, prepared and transmitted through a network (the internet). When you send data, it travels down through the layers and is then sent. When data is received, it travels through the stack from bottom to top to be reconstructed and displayed.

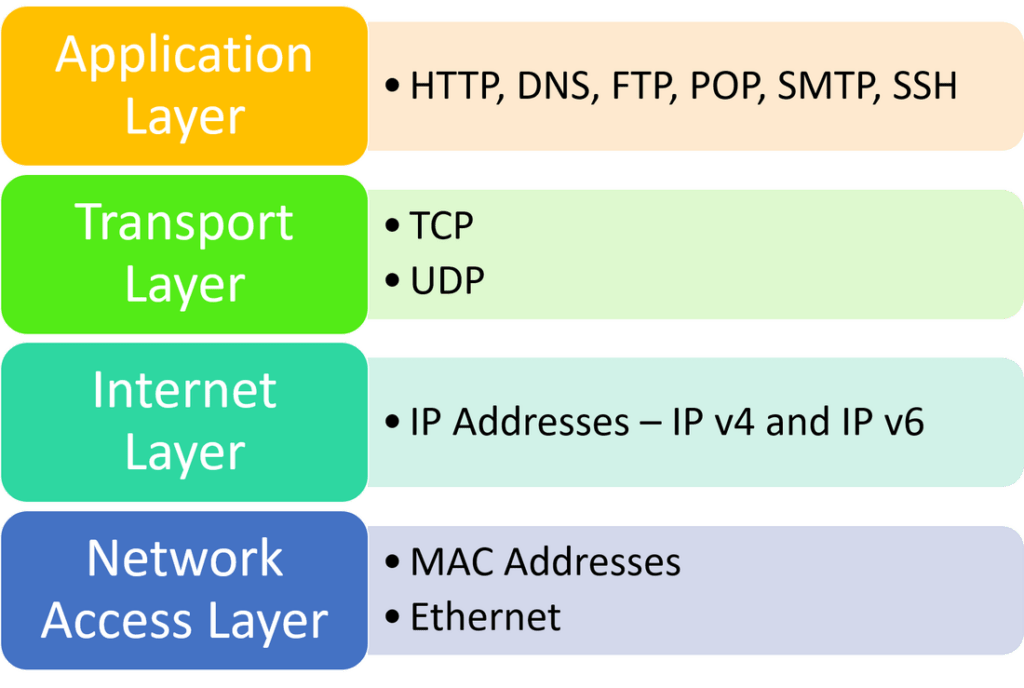

TCP/IP is split into four main layers:

- Application

- Transport

- Internet

- Link (sometimes called Network Access)

TCP/IP at this “layer level” shows how a network connection should be organised and gives an idea of what happens at each stage. Each layer of the model contains many protocols – the things that rigidly define how a particular part of the stack will work.

Breaking up a significant challenge such as “making the internet work” into a model like this has some significant advantages:

- Developers of both hardware and software may focus on one particular layer, without having to worry about the others. This saves time and money.

- Each layer can be robustly defined in terms of what it does and how it functions. This is the whole purpose of standards! Everyone knows what should happen at each layer or in each protocol.

- Scalability – new protocols may be added in each layer to add new functionality and features.

In the exam, you are not expected to draw the TCP/IP stack nor to name its layers. However, you do need to know what a layer is and why conceptual models break up networking tasks into layers.

Definition of layers:

- Layers divide the design of a set of related protocols into small, well defined pieces.

- Each layer above a lower layer adds to the services provided by the lower layers.

- Each layer is independent of other layers and clearly defines the services it provides. No layer needs to know HOW another layer works, only what to pass to it or expect from it.

The big advantage of layers is that they allow us to make changes in one layer without affecting other layers!
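A rough sketch of that advantage in Python is shown here. The class names `CableLink` and `WirelessLink` and the function `send_message` are hypothetical, invented purely for illustration. The point is that the "upper layer" function only knows what to pass down, not how the lower layer works, so the lower layer can be swapped without touching it.

```python
class CableLink:
    """Hypothetical lower layer that pretends to send data down a cable."""

    def transmit(self, data: bytes) -> str:
        return f"cable:{data.hex()}"


class WirelessLink:
    """Hypothetical lower layer that pretends to send data by radio."""

    def transmit(self, data: bytes) -> str:
        return f"radio:{data.hex()}"


def send_message(text: str, link) -> str:
    """Upper layer: only relies on the link having a transmit() method."""
    return link.transmit(text.encode("utf-8"))


# The same upper-layer code works with either lower layer.
print(send_message("Hi", CableLink()))
print(send_message("Hi", WirelessLink()))
```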

Ethernet

Ethernet is arguably the standard protocol for wired networks, on which higher-level networking protocols rely. Remember, a protocol is a set of defined rules and methods for sending and receiving data on a network. If you adhere to these standards, your device will be able to communicate with any other device which also adheres to them.

Ethernet is IEEE standard 802.3. Now you know that, you can wow your friends, parents or pet trifle with your new found knowledge.

But, what does this actually mean?

Ethernet is:

- A set of standards

- Which dictate how computers should be physically connected…

- …and exactly how data will travel between those devices.

- More secure than wireless because it uses physical connections.

Ethernet is found at the very bottom layer of the TCP/IP model and for some reason, OCR have picked it out as a protocol you should be especially aware of. They are also extremely picky about the use of the word Ethernet, so let us establish one important point right now:

In your exam you are to refer to Ethernet as a protocol only.

You are NOT to ever use the word Ethernet in place of “network cable.”

Yes, I am fully aware that every person and their dog says “ethernet cable” when they mean “network cable” but we cannot use this phrase. That is what the good people at OCR have decided and we must play their game to the fullest of our ability.

It will be sufficient for your exam for you to summarise Ethernet in the following way:

Ethernet identifies network devices by their MAC addresses. It is responsible for establishing the physical connection (at an electrical signal level) between devices on a network. The Ethernet standard defines and specifies the type of cables that must be used and how data is to be transmitted down those cables in the form of “frames” of data. A frame, at GCSE level, is simply a collection of data encapsulating a packet from higher layers which is to be sent across a connection.
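This goes beyond what the exam asks for, but a simplified sketch of a frame may help it feel less abstract. The Python below wraps a payload with a destination MAC address, a source MAC address, a type field and a checksum. It is an approximation under stated assumptions: the MAC addresses are made up, and real Ethernet also adds a preamble, pads short payloads and has its own rules for how the checksum is transmitted, none of which is modelled here.

```python
import struct
import zlib


def mac_to_bytes(mac: str) -> bytes:
    """Turn 'aa:bb:cc:dd:ee:ff' into its 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def build_frame(dest_mac: str, src_mac: str, payload: bytes) -> bytes:
    """Simplified frame: 14-byte header + payload + 4-byte CRC-32 checksum."""
    ethertype_ipv4 = 0x0800  # type value meaning "the payload is an IPv4 packet"
    header = (
        mac_to_bytes(dest_mac)
        + mac_to_bytes(src_mac)
        + struct.pack("!H", ethertype_ipv4)
    )
    body = header + payload
    return body + struct.pack("!I", zlib.crc32(body))


def frame_is_intact(frame: bytes) -> bool:
    """Recalculate the checksum and compare it with the one in the frame."""
    body, checksum = frame[:-4], frame[-4:]
    return struct.pack("!I", zlib.crc32(body)) == checksum


# Made-up MAC addresses, purely for illustration.
frame = build_frame("aa:bb:cc:dd:ee:ff", "11:22:33:44:55:66", b"hello packet")
print(len(frame), frame_is_intact(frame))
```

If even one byte of the frame is damaged in transit, the recalculated checksum will no longer match and the receiver knows to discard it.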

Ethernet has several advantages:

- It is the standard for network communication and as such virtually all devices that are networked use these standards. Manufacturers will always adhere to the Ethernet standard when making a device with wired connectivity.

- Compatibility – any device with an RJ45 network socket will support the Ethernet standard

- Speed – it’s silly fast – speeds of 100 gigabits per second or more can be achieved

- Security – you have to physically connect to the network to intercept packets

- Reliability – the use of frames and error detection methods means data can be sent quickly and reliably on the network

Disadvantages:

- Ethernet can be affected by interference on or around cables which may disrupt data transmission

- Distance – copper network cables have a maximum sensible length of 100m before the signal degrades too much to be useful.

Other protocols you need to know about

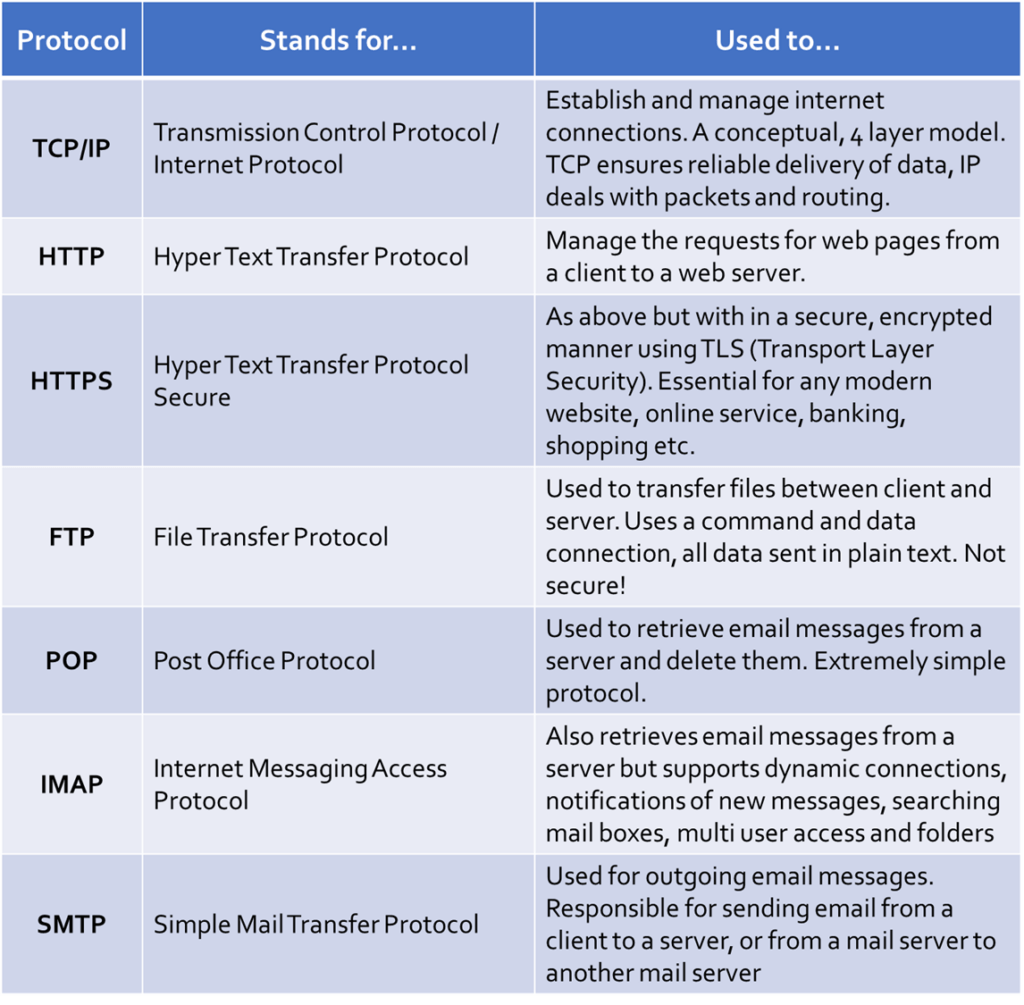

The topic of protocols and layers is an absolute favourite on the OCR GCSE, however due to the complexity of protocols, you are only required to understand the briefest of overviews for each one. Below are all the protocols which are on the GCSE specification, along with a very short explanation of what each stands for, is responsible for and how it may be used.

You shouldn’t need to know any more than this…

TCP/IP

What does it stand for? Transmission Control Protocol/Internet Protocol

What is it? A “suite of protocols” which defines how internet connections are created and managed. TCP/IP is named after its two main protocols: TCP, which is responsible for establishing connections between devices, splitting data into packets and ensuring reliable delivery, and IP, which is responsible for addressing those packets and routing them through the internet to their destination.

TCP/IP actually contains many more protocols, organised into four conceptual layers, which provide all of the functionality we expect from an internet connected device such as being able to transmit and receive web pages, emails or even synchronise the time between devices.
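The idea of splitting data into numbered packets and putting them back in order at the destination can be sketched in a few lines of Python. This is a simplified illustration only: the function names are invented for this example, and real packets carry far more information than a sequence number.

```python
import random


def split_into_packets(data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Cut the data into chunks and label each one with a sequence number."""
    return [
        (seq, data[start:start + size])
        for seq, start in enumerate(range(0, len(data), size))
    ]


def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Sort the packets by sequence number and join the chunks back together."""
    ordered = sorted(packets, key=lambda packet: packet[0])
    return b"".join(chunk for _, chunk in ordered)


message = b"Packets may arrive in any order!"
packets = split_into_packets(message, 8)
random.shuffle(packets)  # simulate packets taking different routes
print(reassemble(packets) == message)
```

Because every packet carries its own number, it does not matter which route each one takes or in what order they turn up.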

Anything else I need to know? Everything in the section above which explains TCP/IP and layers in more detail!

HTTP

What does it stand for? Hyper Text Transfer Protocol

What is it? The protocol responsible for establishing a connection between your web browser and a web server and transmitting website data from server to client. Web browsers send HTTP requests for a web page to a web server. When a request is received, the server returns a response containing a status code along with the web page itself. Status codes are three-digit numbers, classified according to the first digit. You may well have seen some of these when a website fails to work, such as “404 – Not Found” when a page has been removed or “500 – Internal Server Error” when something goes wrong on the server itself.

Anything else I need to know? HTTP is a plain text protocol, meaning data sent between client and server is not encrypted in any way. If it is intercepted, it can be immediately understood. HTTP uses URLs (Uniform Resource Locators – website addresses) to locate web servers. The domain name in a URL must be translated into an IP address through the use of another protocol – DNS!
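Because HTTP is plain text, a request is easy to build by hand. The sketch below, which is an illustration rather than a full HTTP client, builds the text of a simple GET request from a URL and classifies a response by the first digit of its status code. The function names and the `STATUS_CLASSES` table are made up for this example; nothing is actually sent over a network.

```python
from urllib.parse import urlsplit

# The first digit of a status code tells you the general kind of response.
STATUS_CLASSES = {
    1: "Informational",
    2: "Success",
    3: "Redirection",
    4: "Client error",
    5: "Server error",
}


def build_get_request(url: str) -> str:
    """Build the plain text of a simple HTTP GET request for the given URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return (
        f"GET {path} HTTP/1.1\r\n"
        f"Host: {parts.hostname}\r\n"
        "Connection: close\r\n"
        "\r\n"
    )


def classify_status(status_line: str) -> str:
    """Classify a status line such as 'HTTP/1.1 404 Not Found'."""
    code = int(status_line.split()[1])
    return STATUS_CLASSES[code // 100]


print(build_get_request("http://example.com/index.html"))
print(classify_status("HTTP/1.1 404 Not Found"))
```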

HTTPS

What does it stand for? Hyper Text Transfer Protocol – Secure

What is it? HTTPS is an extension to the HTTP protocol, meaning it works in exactly the same way with one difference: data is sent in a secure manner through the use of encryption. HTTPS establishes a secure connection to a web server using yet another protocol called “TLS”, which stands for Transport Layer Security. TLS encrypts traffic sent between client and server, which prevents a third party from understanding any data they may intercept.

Anything else I need to know? Without HTTPS, it would not be possible to use the WWW securely. Everyday activities such as shopping, using online banking or logging in to any website for any reason rely on HTTPS to protect not only the user names and passwords sent between client and server, but also the sensitive data that may subsequently be transmitted.
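For the curious, this is roughly what "HTTP wrapped in TLS" looks like using Python's standard `ssl` module. Treat it as a sketch, well beyond what the exam requires: `fetch_status_line` is a made-up helper name, it needs a working internet connection when called, and real browsers do considerably more than this.

```python
import socket
import ssl

# A default context checks the server's certificate and host name for us.
context = ssl.create_default_context()


def fetch_status_line(host: str) -> str:
    """Send an HTTP HEAD request over an encrypted TLS connection (port 443)."""
    with socket.create_connection((host, 443), timeout=10) as raw_sock:
        # wrap_socket performs the TLS handshake; everything after is encrypted.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls_sock.sendall(request.encode("ascii"))
            reply = tls_sock.recv(4096)
    # Return just the first line of the reply, e.g. "HTTP/1.1 200 OK".
    return reply.split(b"\r\n", 1)[0].decode("ascii", errors="replace")
```

Calling something like `fetch_status_line("example.com")` sends the very same plain text request that HTTP would, only the connection it travels over is encrypted.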

FTP

What does it stand for? File Transfer Protocol

What is it? A protocol designed purely for the sending and receiving of files between a client and a server. OCR are very clear that they want you to say it is a client-server model in your answers! FTP actually creates two connections between a client and server, one for control signals (requests, signing in and out, etc.) and one for the actual data transfer. All data, log in information and control commands are sent in plain text.

Anything else I need to know? FTP has been phased out of use during recent years and most modern web browsers no longer support it, due to multiple security concerns and better methods of file transfer emerging. Alternatives such as FTP Secure (FTPS) and the SSH File Transfer Protocol (SFTP) are now used.
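The "two connections" idea shows up in FTP's so-called passive mode, where the server uses the control connection to tell the client which address and port to open the separate data connection on. The sketch below parses such a reply. It goes beyond the specification and is for illustration only: the function name is invented and the address in the example is made up.

```python
import re


def parse_pasv_reply(reply: str) -> tuple[str, int]:
    """Extract the data connection's host and port from a '227' reply."""
    match = re.search(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)", reply)
    if match is None:
        raise ValueError("not a passive mode reply")
    numbers = [int(n) for n in match.groups()]
    host = ".".join(str(n) for n in numbers[:4])
    # The port is sent as two separate bytes: high byte, then low byte.
    port = numbers[4] * 256 + numbers[5]
    return host, port


# Example reply sent over the control connection (made-up address).
print(parse_pasv_reply("227 Entering Passive Mode (192,168,1,2,19,137)"))
```

The control connection carries text replies like this one, while the file itself travels over the second connection to the host and port it describes.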

POP

What does it stand for? Post Office Protocol

What is it? POP is used exclusively to connect to email servers and to retrieve email. To be clear, POP only gets email from a server and then deletes the server copy. It does almost nothing else. POP is an extremely simple protocol to implement and is still in use today despite being one of the earlier protocols to be created. POP stores email locally, on the client computer.

Anything else I need to know? POP cannot be used to send emails. POP has been developed to allow encrypted connections to email servers.

IMAP

What does it stand for? Internet Message Access Protocol

What is it? IMAP is another protocol used for the retrieval of email from a server, however it is more fully featured than POP. Whilst POP will connect, retrieve mail and then disconnect from a server, IMAP can maintain a connection to the email server, meaning if new email arrives it can automatically be fetched. IMAP contains features which allow the use of folders in an email inbox and allow multiple users to access the same mailbox on a server. It also provides support for monitoring the state of mail messages such as read, unread, high priority and so forth.

Anything else I need to know? IMAP is not responsible for the sending of email messages!
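The key difference between POP and IMAP is where the email ends up living. The toy model below is not either real protocol (Python's `poplib` and `imaplib` modules exist for that); `ToyMailServer` and the two fetch functions are hypothetical names that simply mimic the behaviour described above.

```python
class ToyMailServer:
    """A pretend mail server holding messages and their read/unread state."""

    def __init__(self, messages):
        self.messages = list(messages)
        self.read_flags = {message: False for message in self.messages}


def pop_style_fetch(server: ToyMailServer) -> list:
    """POP-like: download everything, then delete the server's copies."""
    downloaded = list(server.messages)
    server.messages.clear()
    server.read_flags.clear()
    return downloaded


def imap_style_fetch(server: ToyMailServer) -> list:
    """IMAP-like: read the mail but leave it on the server, marked as read."""
    for message in server.messages:
        server.read_flags[message] = True
    return list(server.messages)


server = ToyMailServer(["Welcome!", "Your order has shipped"])
imap_style_fetch(server)
print(len(server.messages))  # still 2: the mail stays on the server
pop_style_fetch(server)
print(len(server.messages))  # now 0: the only copy is on the client
```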

SMTP

What does it stand for? Simple Mail Transfer Protocol

What is it? SMTP manages the sending of email. It is called an “outgoing” protocol to reflect the fact that it is responsible for taking email messages from a client and sending them to an email server to be delivered or forwarded on to another mail server. SMTP, therefore, is the protocol which governs mail being sent from client to server, or from mail server to mail server.

Anything else I need to know? SMTP was originally a plain text protocol, but modern mail servers support secure, encrypted connections through TLS.
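Python's standard library includes an SMTP client, so sending an email can be sketched as follows. Treat this as an outline under assumptions: the helper names `build_email` and `send_email` are invented for this example, port 587 is a commonly used submission port rather than a guarantee, and most real mail servers will also require you to log in before accepting a message.

```python
import smtplib
from email.message import EmailMessage


def build_email(sender: str, recipient: str, subject: str, body: str) -> EmailMessage:
    """Create an email message ready to be handed to an SMTP server."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = subject
    message.set_content(body)
    return message


def send_email(message: EmailMessage, server_host: str, port: int = 587) -> None:
    """Hand the message to a mail server over an encrypted connection."""
    with smtplib.SMTP(server_host, port) as server:
        server.starttls()  # upgrade the connection to TLS before sending
        server.send_message(message)


# Building a message needs no network; only send_email() talks to a server.
email = build_email("me@example.com", "you@example.com", "Hello", "Hi there")
print(email["Subject"])
```

Note that nothing here retrieves email. That job still belongs to POP or IMAP.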

Protocols – A Quick Reference Summary